This is quite a long blog post, much longer than I usually write, but there is a lot to reflect on regarding the short course called the 12 Days of AI. It was mainly written for my own purposes but it might be useful for others...

Why we wanted to do the 12DoAI

When ChatGPT was released in November 2022 it immediately sent ripples of interest and unease across education and the HE sector. Whilst many were intrigued by its potential uses, the most common response was to focus on its ability to write students’ written assignments. There were constant reports on how ChatGPT could be used to pass university-level assignments and what the response should be to this potential threat to academic integrity. My own institution’s first response was to add an extra sentence to the academic misconduct policy explicitly stating that AI tools like ChatGPT should not be used and that any student caught using them would be in breach of the policy. This was a very common response and was repeated at many other universities. However, the sector moved on quickly and started to produce staff guidance on how and when AI could be used in a teaching and learning context. The most comprehensive example of this guidance was produced by King’s College London, which I’m sure has influenced how other institutions have developed their own guidance.

At UAL the central Digital Learning Practice Team has taken a slightly different approach. I think one of the reasons for this is that we are an arts-based institution and a lot of the students’ work is based around visual and moving images. Only a relatively small part of students’ assessment is high-stakes written assessment. This means that a lot of the learning and assessment is based around creativity, playful learning and developing new ways of doing things in creative spaces and installations. Indeed, there is a long history of using machine learning and AI in creative spaces in UK art schools. So our response has been to invite the different stakeholders into an ‘AI Conversation’ about the implications of AI for art education. We set up a weekly seminar where we invited guest speakers to discuss their area of specialism regarding AI. We started this series with a discussion about AI and assessment and then looked at several other topics, such as how students are using AI, AI and libraries, AI and art education, and wider issues such as the data bias built into AI algorithms.

The AI Conversations series was great for starting that debate across the different art colleges at UAL about the implications of using AI in education, but it soon became apparent that people wanted to know what AI tools were being used and how they could be used in their teaching. This was the main reason for setting up the 12 Days of AI ‘course’. The aim was to introduce the main tools, set a short task for each, and then encourage a debate about their usefulness and the implications of using them in a teaching context.

Overview of the 12DoAI

So what was the 12 Days of AI (12DoAI)? It was an online programme which, in 20 minutes a day over 12 days, got participants using a variety of different AI tools. It was aimed at teaching and learning staff who hadn’t used AI before or who had experimented a little with some AI tools. I started writing the course with three objectives in mind:

- How to set up and use 12 AI tools

- How these tools could be used in a teaching and learning context

- Discussions about the potential and limitations of using these AI tools

The course was set up on a WordPress blog and ran from Thursday 30th November to Friday 15th December 2023. Each day during the 12-day programme, we released a new blog post at 10 am (or close to!) detailing a 20-minute task to be done using an AI tool, such as writing a prompt, summarising text or creating a video. Each blog post guided the learner through a different AI tool, offering tips and suggestions on how it could be applied effectively in an educational context. Each task was followed by a series of questions about the activity. The questions were intended to prompt reflection on the task and a critical look at some of the issues related to using the tool in a classroom setting.

Even though I’ve called the 12DoAI a ‘course’, I use the term very broadly because it was designed to run with very little direct intervention from myself or the other facilitators. There were no live sessions – all the tasks were asynchronous activities that could be done over the 12 days. The blog is still ‘live’, so the tasks can be done at any time (in fact, we are still getting comments a month later). There were no formal summative assessment activities – only the formative discussion in the blog comments section. Also, there was no individual recording of engagement on the programme. These are some of the things you would expect on a ‘course’, so a better description would be something like an ‘online staff development learning space’ – which doesn’t ‘roll off the tongue’ so easily!

Sign ups:

By the end of the course over 1200 participants had signed up (note to myself – ask Hannah how many actually signed up). We advertised the course both internally at UAL and across the HE sector. Internally, the course was publicised on UAL’s intranet, across different Teams sites and through the Teaching and Learning Exchange’s Twitter feed. Externally, it was advertised in the Association for Learning Technology’s (ALT) newsletter and on the Staff and Educational Development Association’s (SEDA) email list. I also publicised it myself through a post on my own blog and on X and LinkedIn.

Evaluating the 12DoAI:

Feedback from Questionnaires:

We sent two questionnaires to the 12DoAI participants: the first halfway through the course, on day 6, and the second on the last day of the course. The first questionnaire, the interim survey, was sent out to give us some immediate feedback so that we could make changes to the course while it was running. The second, the end of course survey, was to give us some overall feedback about the whole 12 days.

Feedback from interim survey:

We asked 10 questions in total but I will just focus on the results of two. The first, ‘What do you like or dislike about 12 Days of AI?’, elicited several things that participants really liked:

- the structure of the course: “I love the format! Daily bite size tasks to easily allow staff to explore different tools with simple but thoughtful tasks”, “I like that it self-led can choose which time suits you best the variety of the tools”

- the breadth of the AI tools: “Like variety and when have more time will look at alternatives”, “Really like the diversity of tools being offered and the suggested prompts”

- the interaction in the blog discussion: “the comments after each task is great” and “its a good learning experience seeing others thoughts and experiences”

The main thing they disliked, and this was mentioned many times, was having to sign up to the different tools with their email address.

The second question I was interested in was ‘What improvements would you recommend?’ Again, I’ve just chosen three comments that were fairly representative:

- “It’d be nice to have a synchronous component to it, like a meeting via Teams towards the end”.

- “More detailed user guides”

- “I’m probably spending about 40mins on each task, that could be just me but 20mins seems optimistic”

Feedback from end of course survey:

We only got 13 respondents to the end of course survey so I’m really not sure how representative their comments were. Some of the questions got similar responses to the interim survey, but one question I thought was particularly interesting was, ‘Has the 12 days of AI increased your critical awareness of these tools? How do you evaluate these new tools for use in your practice or work?’ We got a variety of responses:

- ‘Very much so. It showed me far more sites / tools than I was aware of’.

- ‘I’d like to know more about data security of using those AI tools. Definitely need to check their data sharing policy before sign up’.

- ‘Yes, the 12 days of AI have increased my awareness of these range of tools. In terms of how I evaluate new tools: – ease of use – time to understand how to use, create with the tool – accessibility for a range of possible users and accessibility needs – the visual of it for users’

- ‘My critical awareness has been increased more by the AI conversations that UAL facilitates. The 12 days of AI were more for a quick trial of some tools; still very useful though!’

This was one of the aims of the 12DoAI – to raise critical awareness of these tools – so it was good to see these responses.

Google Analytics

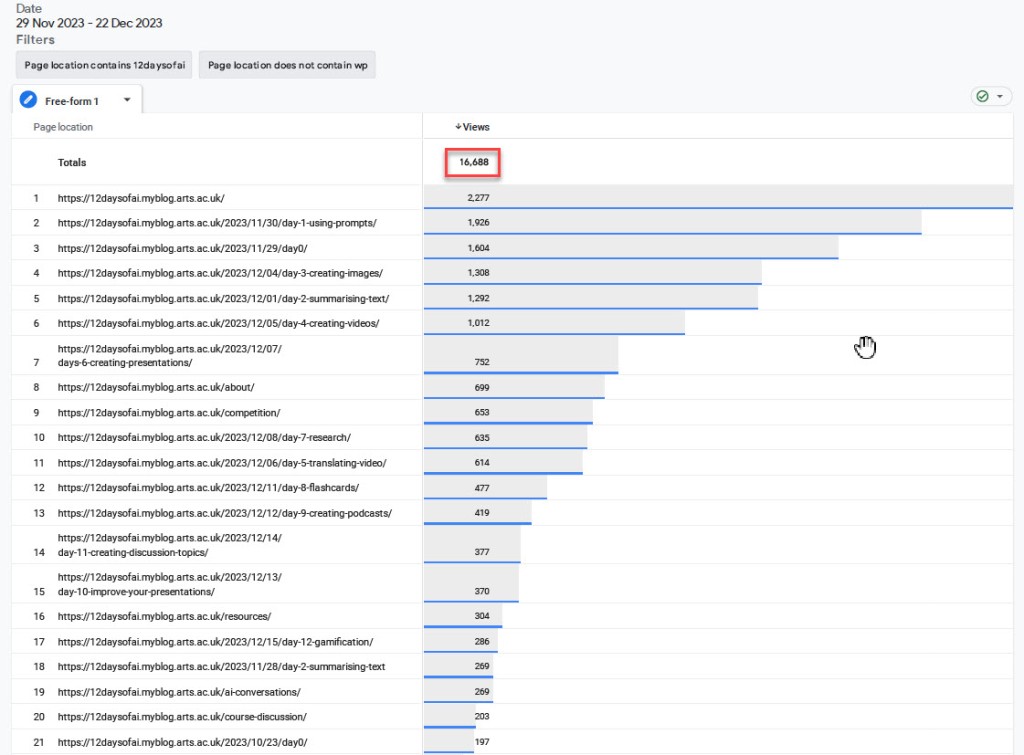

At the end of the 12 days I got Google Analytics to look at the blog site (actually it was my colleague Phil Haines who did this):

As you can see from the image above, in total the blog site got an amazing 16,688 views over the duration of the course! On the first day it had 1,926 views. It’s probably no surprise that the numbers dropped off over the 12 days, but even on the last day it had 286 views.

Comments

The last bit of data I looked at was the comments section in the blog. In total, over the course there were 458 comments posted on the 12DoAI site:

The number of comments drops off quite significantly over the 12 days – I’ll talk about this more in a further blog post.

That’s probably enough for one blog post today – in my next post I’ll look at ‘Lessons learnt’ and ‘What next’. Please leave a comment on this post, especially if you participated in the 12DoAI course – thanks!